OpenAI’s latest creation, ChatGPT-4o, isn’t just an upgrade; it’s a shot across the bow of the AI world. The “o” stands for “omni,” signifying a model that transcends the limitations of text-only AI. It points to a world where machines not only understand our words but also decipher the subtle cues in our voices and the visual tapestry around us. It’s both exhilarating and, frankly, a little terrifying.

Multimodal of Madness

Everyone’s throwing around “multimodal” like it’s the latest Silicon Valley buzzword. But what does it really mean for AI? Previous attempts, even those claiming audio and visual capabilities, relied on a patchwork of separate models cobbled together. Imagine a team of translators trying to have a conversation – slow, clunky, and prone to misinterpretations.

ChatGPT-4o throws that fragmented approach out the window. It’s built from the ground up to process text, audio, and images natively. Think of it as an AI with its own senses, capable of experiencing the world in a much richer, more nuanced way than its predecessors.
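To make the difference concrete, here is a toy sketch in Python. Nothing in it is OpenAI code: the `Utterance` type and all three functions are hypothetical stand-ins. It only shows why a chain of separate models loses information, since the tone of voice is thrown away the moment speech becomes plain text.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    """Hypothetical spoken input: the words plus how they were said."""
    words: str
    tone: str


def speech_to_text(utterance: Utterance) -> str:
    # A transcription stage hands on only the words; the tone is dropped here.
    return utterance.words


def text_only_model(text: str) -> str:
    # Stand-in for a text model that never sees the original audio.
    return f"Heard: {text}"


def cascaded_pipeline(utterance: Utterance) -> str:
    # Patchwork approach: separate stages chained together.
    return text_only_model(speech_to_text(utterance))


def native_model(utterance: Utterance) -> str:
    # Stand-in for a single model that receives the full signal.
    return f"Heard: {utterance.words} (tone: {utterance.tone})"


if __name__ == "__main__":
    said = Utterance(words="I am fine", tone="shaky")
    print(cascaded_pipeline(said))
    print(native_model(said))
```

The cascaded version can only ever answer the words. The native version at least has the chance to notice that "I am fine" did not sound fine.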

This native processing is the key to its jaw-dropping speed. Remember those awkward pauses waiting for GPT-4 to respond to your voice? With an average audio response time of 320 milliseconds, ChatGPT-4o is faster than your morning coffee kicking in.
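For a sense of scale, the quick calculation below compares that 320 ms figure with the average Voice Mode latencies OpenAI reported for the older pipeline at launch (2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4). The constant and function names are just illustrative.

```python
# Average latencies in seconds, as reported by OpenAI at the GPT-4o launch.
GPT35_VOICE_MODE_S = 2.8
GPT4_VOICE_MODE_S = 5.4
GPT4O_AUDIO_S = 0.320


def speedup(old_latency_s: float, new_latency_s: float) -> float:
    """How many times faster the new latency is than the old one."""
    return old_latency_s / new_latency_s


if __name__ == "__main__":
    print(f"vs GPT-3.5 Voice Mode: about {speedup(GPT35_VOICE_MODE_S, GPT4O_AUDIO_S):.0f}x faster")
    print(f"vs GPT-4 Voice Mode:   about {speedup(GPT4_VOICE_MODE_S, GPT4O_AUDIO_S):.0f}x faster")
```

That works out to roughly a 17-fold drop in waiting time against the GPT-4 pipeline, which is the difference between a stilted exchange and something that feels like conversation.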

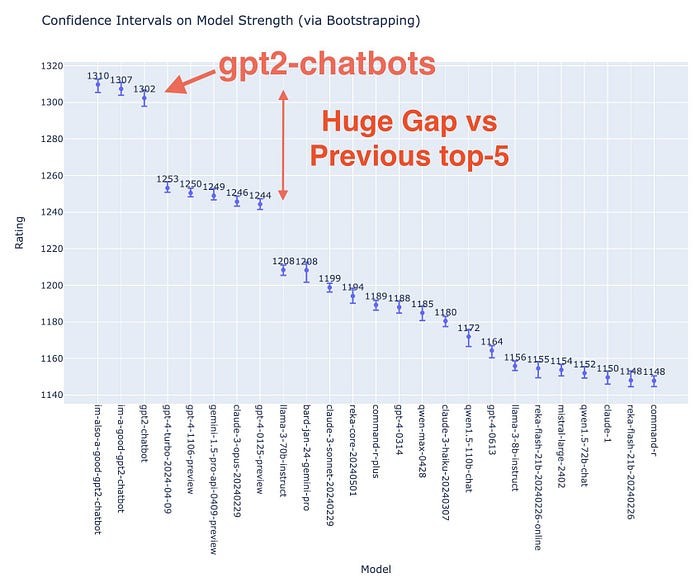

ChatGPT-4o comes out on top 66% of the time against the previous state of the art.

But it’s not just about speed; it’s about the depth of understanding. OpenAI’s demos showcase an AI that can distinguish between multiple speakers in a crowded room, recognize the emotional undertones in your voice, and even pick up on subtle breathing patterns.

Looking into the Architecture

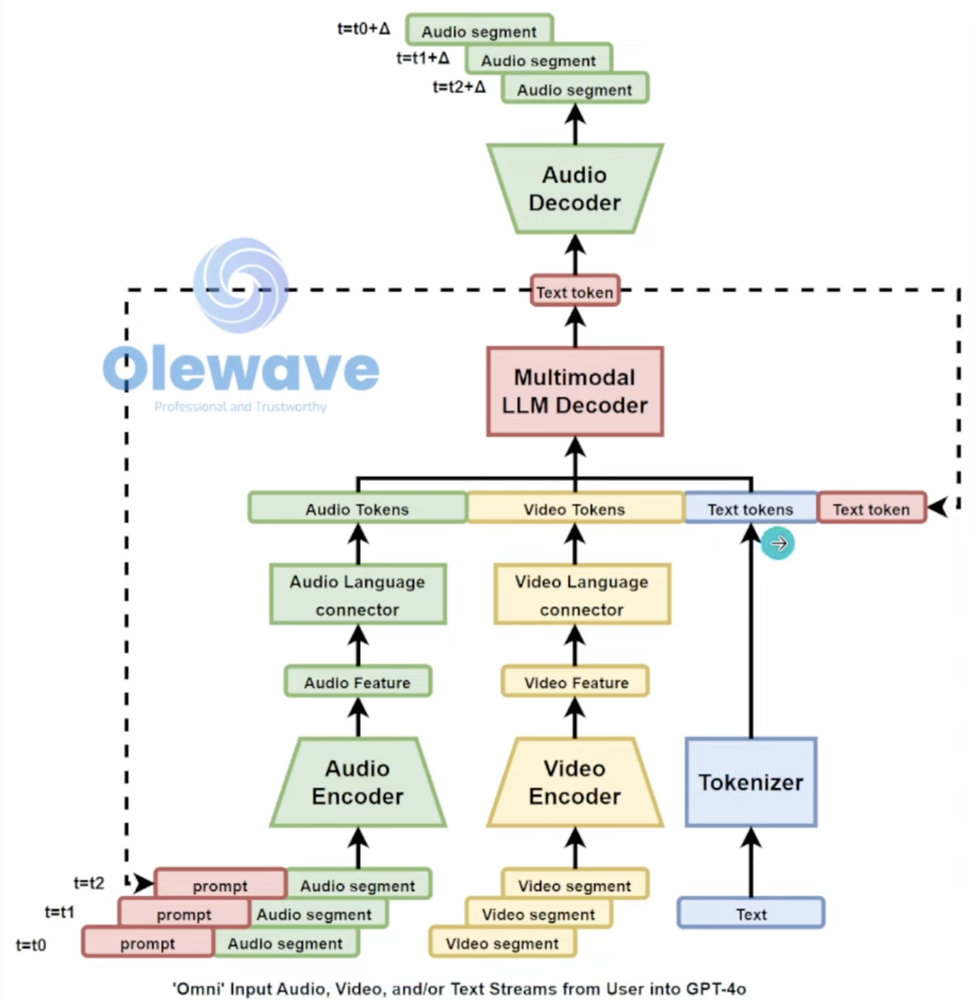

OpenAI is notoriously tight-lipped about its architectural secrets. However, based on the capabilities showcased, we can make a few educated guesses.

First, the API itself has to have undergone a radical transformation. The existing ChatGPT API, limited to text and image input and text output, simply wouldn’t cut it for real-time audio and video processing. We’re likely looking at an entirely new API designed for streaming data input and output.
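What might "streaming in, streaming out" look like? The sketch below is pure guesswork in Python's `asyncio`, not OpenAI's actual interface: `microphone`, `streaming_model`, and `run_session` are hypothetical names. The point is only the shape of the interaction, where the model starts responding after each small chunk of audio instead of waiting for the whole clip.

```python
import asyncio
from typing import AsyncIterator


async def microphone(chunks: list[bytes]) -> AsyncIterator[bytes]:
    """Hypothetical audio source: yields small chunks as they are captured."""
    for chunk in chunks:
        await asyncio.sleep(0)  # hand control back, standing in for real-time capture
        yield chunk


async def streaming_model(audio: AsyncIterator[bytes]) -> AsyncIterator[str]:
    """Hypothetical streaming endpoint: emits a partial response per input
    chunk rather than waiting for the full recording."""
    received = 0
    async for chunk in audio:
        received += len(chunk)
        yield f"partial response after {received} bytes"


async def run_session(chunks: list[bytes]) -> list[str]:
    # Collect the events here for inspection; a real client would act on each one.
    return [event async for event in streaming_model(microphone(chunks))]


if __name__ == "__main__":
    for event in asyncio.run(run_session([b"\x00" * 160, b"\x00" * 160])):
        print(event)
```

A request/response API would have to buffer the entire recording before sending anything. With a stream, input and output overlap, which is what keeps the perceived latency low.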

Speculative model design for ChatGPT-4o, by Olewave

Second, the model’s internal structure must be fundamentally different. Instead of separate modules for text, audio, and vision, ChatGPT-4o likely employs a unified architecture where these modalities are deeply intertwined. This would allow for the kind of cross-modal understanding we see in the demos, where the AI can seamlessly connect what it hears to what it sees.
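One common way to picture a unified architecture is a single sequence of tokens in which text, audio, and image pieces sit side by side. The Python sketch below illustrates that idea only. It is an assumption, not a description of how ChatGPT-4o actually works, and the `Token` type and `interleave` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    modality: str     # "text", "audio", or "image"
    value: int        # index into a hypothetical shared vocabulary
    timestamp: float  # when the token arrived, in seconds


def interleave(*streams: list[Token]) -> list[Token]:
    """Merge per-modality token streams into one time-ordered sequence,
    so a single model could attend across all of them at once."""
    merged = [token for stream in streams for token in stream]
    return sorted(merged, key=lambda token: token.timestamp)


if __name__ == "__main__":
    text = [Token("text", 101, 0.0), Token("text", 102, 1.0)]
    audio = [Token("audio", 7, 0.5)]
    image = [Token("image", 42, 0.2)]
    for token in interleave(text, audio, image):
        print(token.timestamp, token.modality, token.value)
```

Because everything lands in one sequence, what the model hears at 0.5 seconds sits right next to what it saw at 0.2 seconds. Separate modules that only exchange text summaries cannot offer that.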

Reimagining Human-Computer Interaction

The implications of ChatGPT-4o’s capabilities are far-reaching, touching everything from customer service to creative arts.

The Rise of Hyper-Personalized AI Assistants: Imagine an AI assistant that not only manages your schedule but also picks up on your mood based on your voice and adapts its communication style accordingly.

Revolutionizing Education and Accessibility: ChatGPT-4o could empower students with real-time translation, personalized learning experiences, and interactive learning environments. For individuals with disabilities, it could provide a more intuitive and accessible way to interact with technology.

The Next Generation of Content Creation: Imagine a world where AI can generate music based on a painting you describe or create photorealistic videos from your wildest dreams. The possibilities for creative collaboration are limitless.

The Ethical Tightrope: Navigating Omni-Sensory AI

But with great power comes great responsibility. ChatGPT-4o’s realism and responsiveness raise a host of ethical concerns:

The Rise of Deepfakes and Misinformation: The potential for malicious actors to create incredibly convincing fake audio and video content is a serious concern. We need to develop robust detection mechanisms and promote media literacy.

The Blurring Lines of Reality: As AI becomes more human-like, it’s easy to form emotional attachments and forget that we’re interacting with a machine. This raises questions about the ethics of AI companionship and the potential for manipulation.

The Economic Fallout: Let’s not sugarcoat it – jobs are at stake. Any task that relies heavily on human interaction and basic cognitive skills is vulnerable to automation by AI like ChatGPT-4o. We need to start thinking about upskilling and retraining programs for the workforce of the future.

ChatGPT-4o isn’t just another step forward; it’s a leap into uncharted territory. We’re witnessing the dawn of a new era, where AI is not merely a tool but a partner in our lives. This is both exhilarating and daunting. We have a responsibility to guide the development of omni-sensory AI ethically and ensure it benefits all of humanity, not just a select few.

The future of AI is here, and it’s listening.
